Practisim Designer - AncientSky Games

- New Functionality

- Personal inventory of Props: pick items to add to or remove from the list

- Rearrange personal inventory by dragging

- New Props

- Airsoft Challenge Targets & No-Shoots

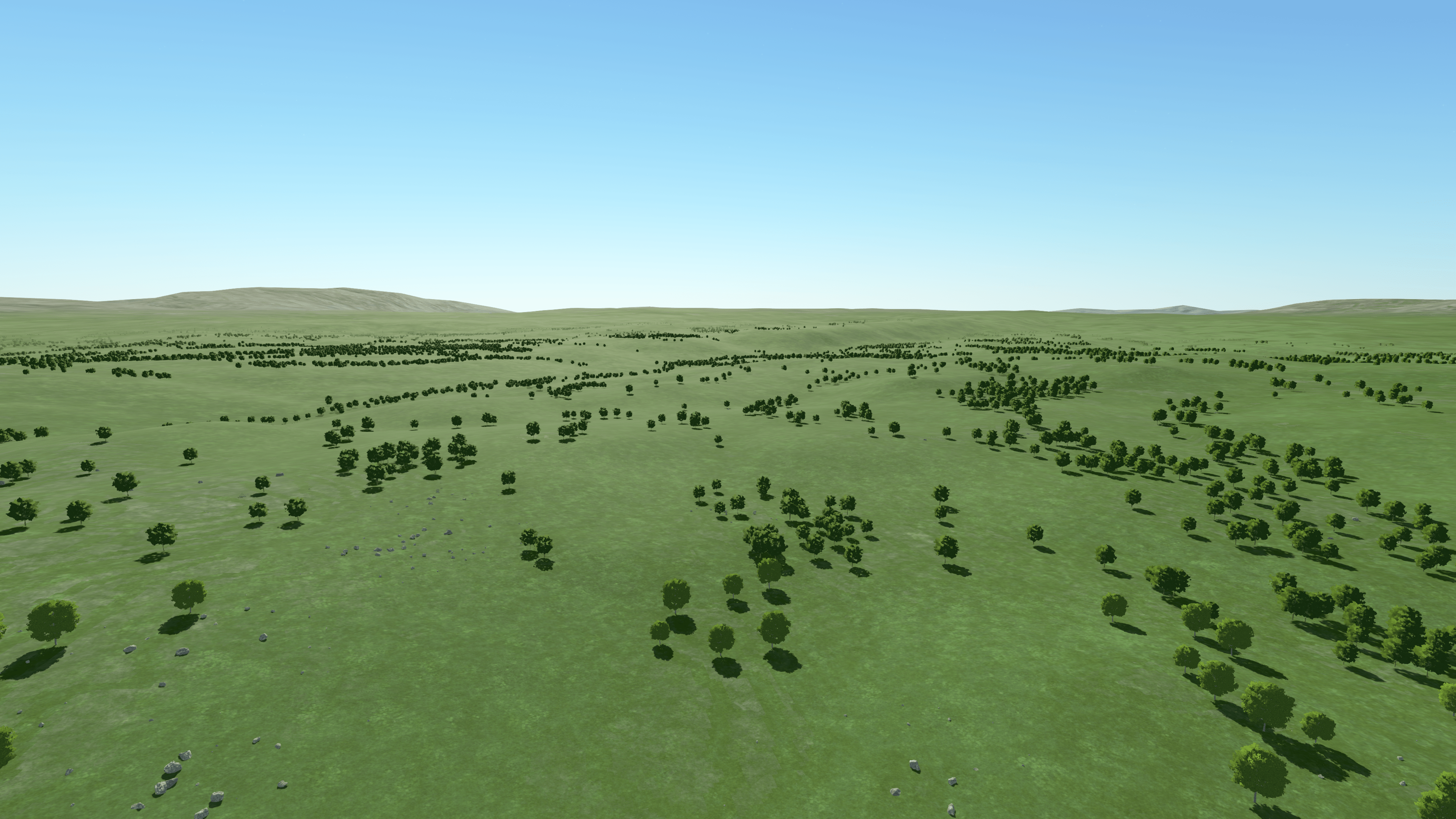

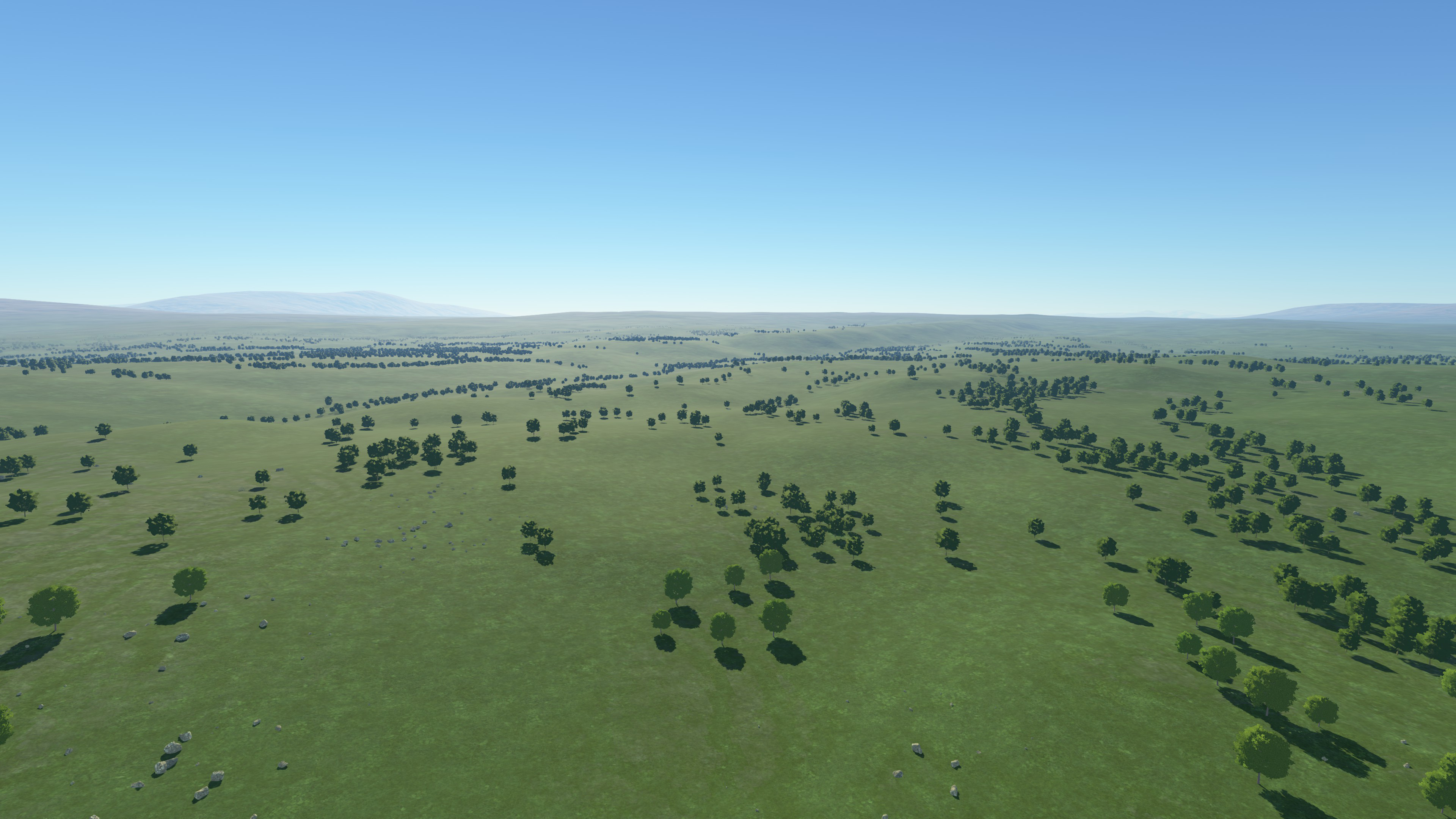

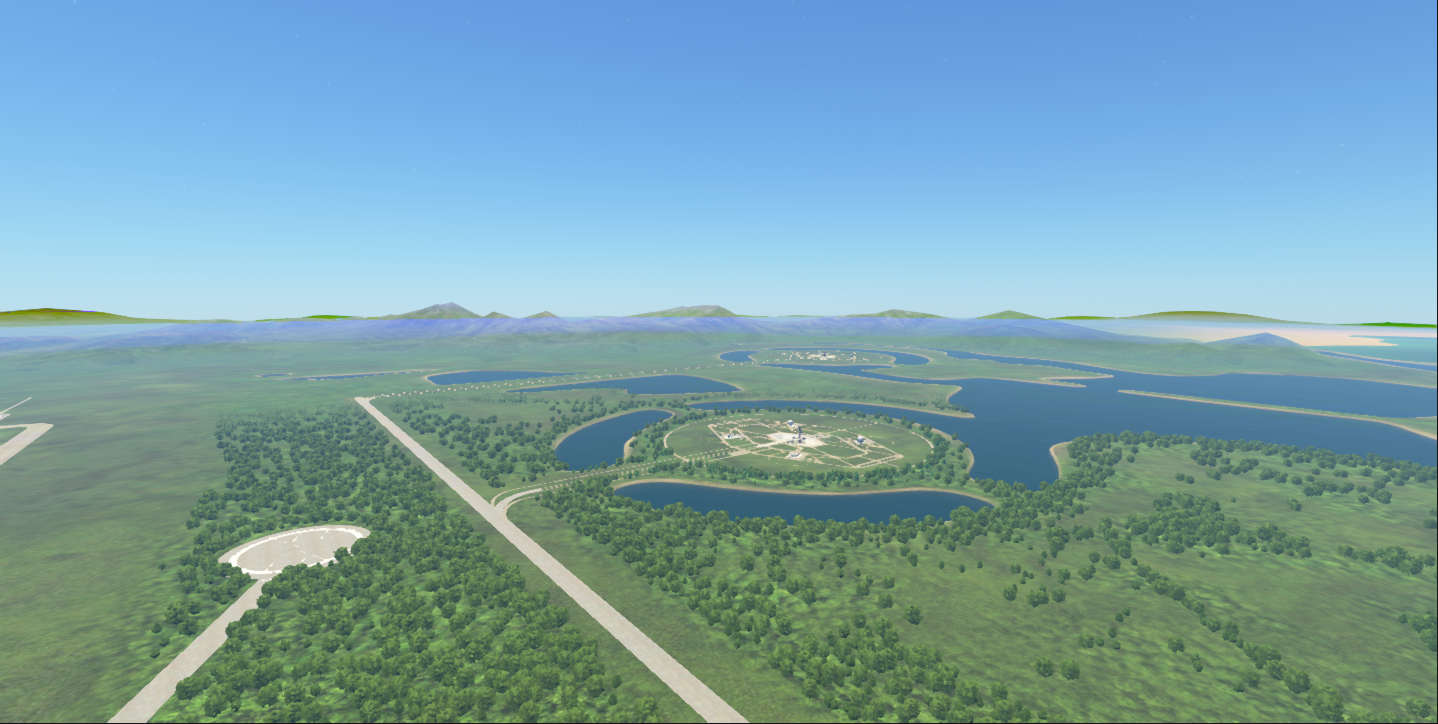

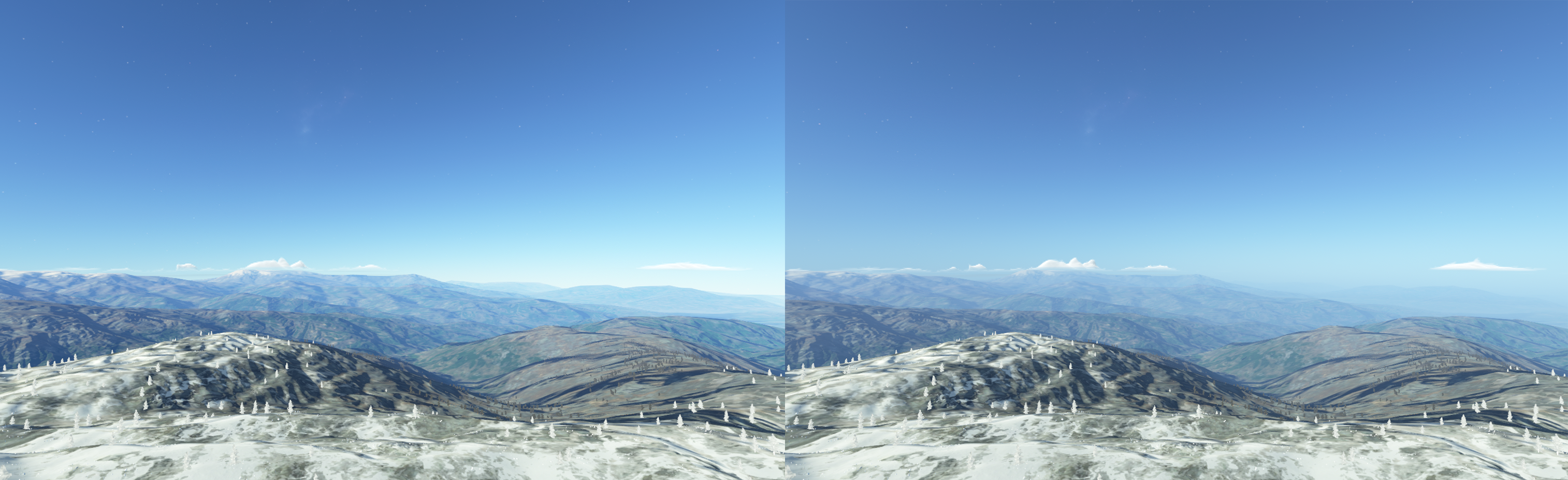

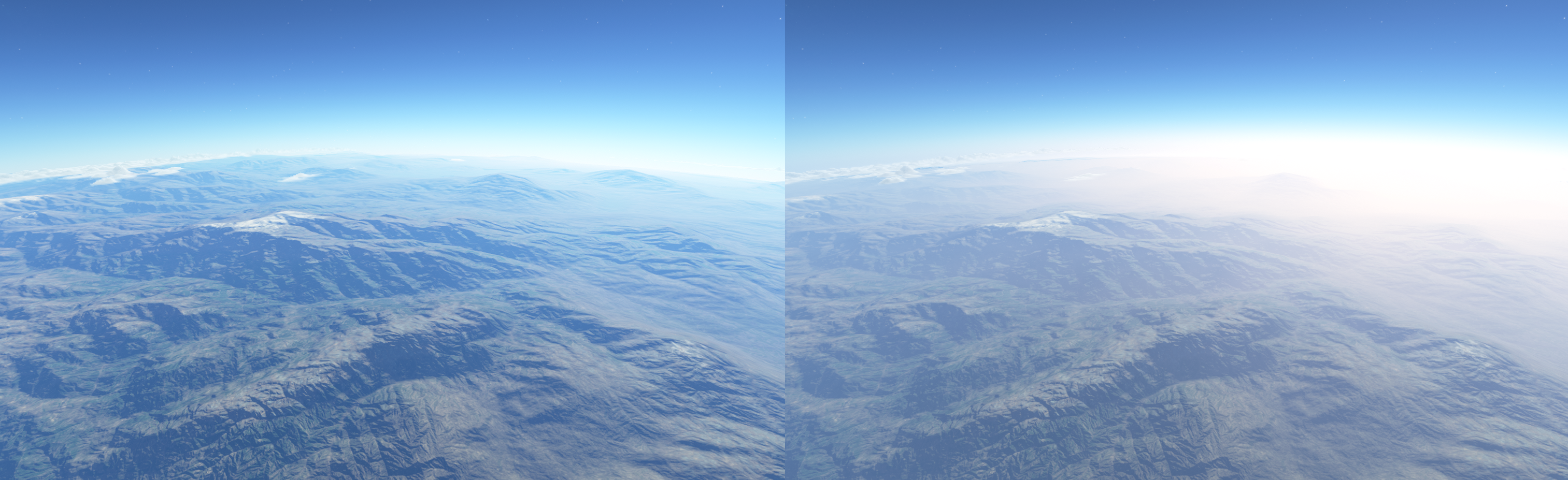

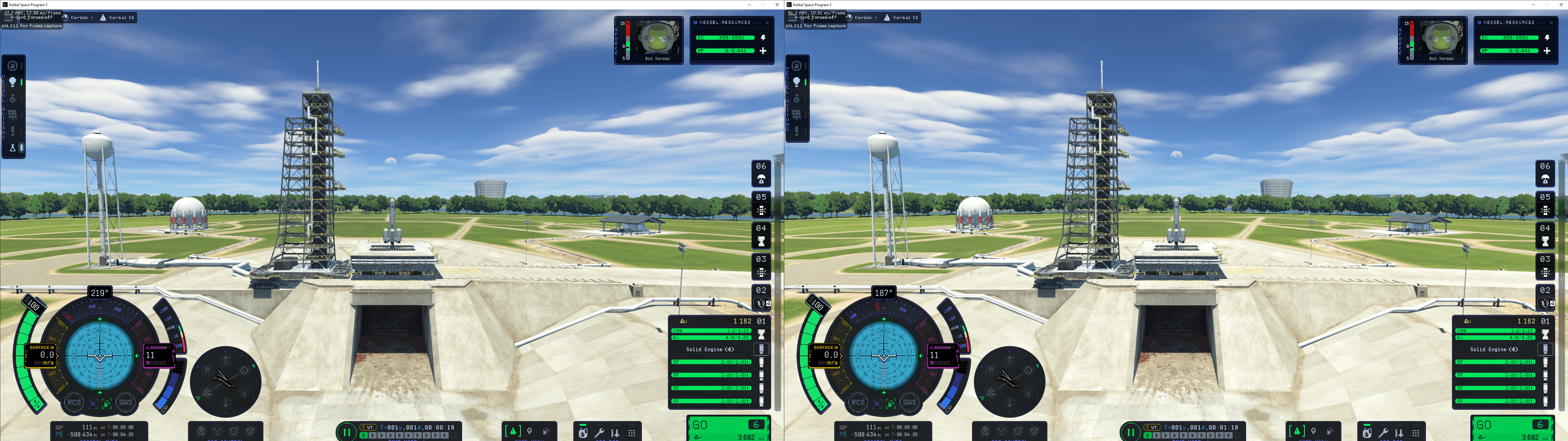

On the launchpad we went from 77 to 91 fps

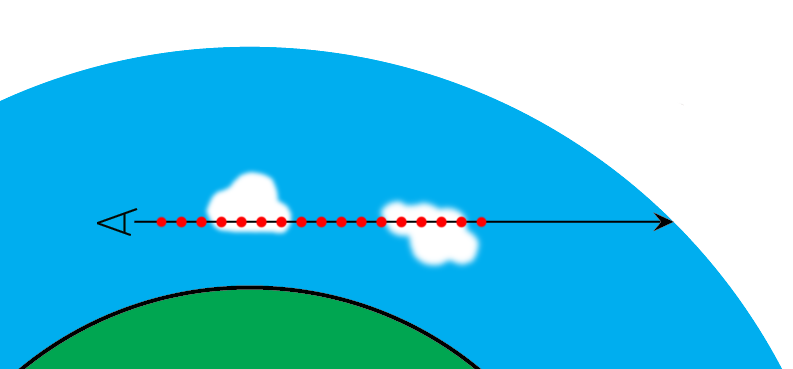

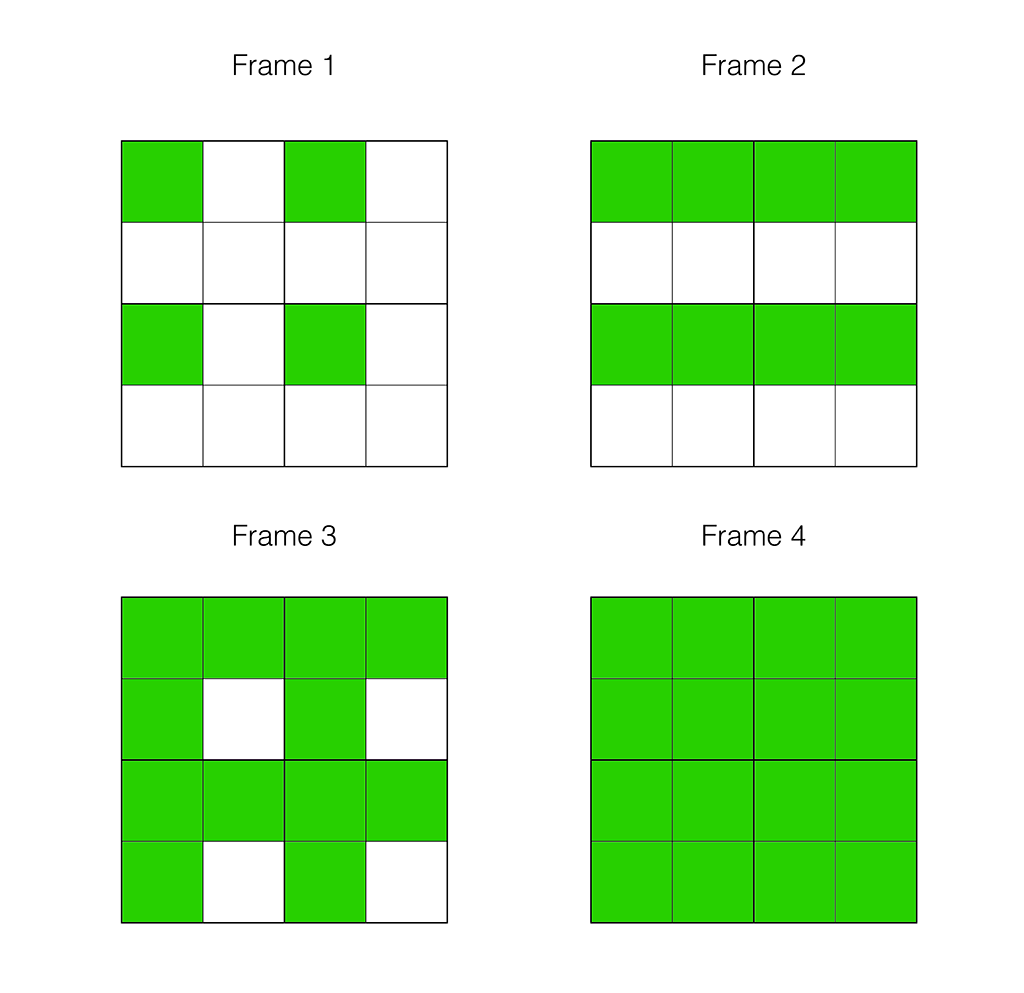

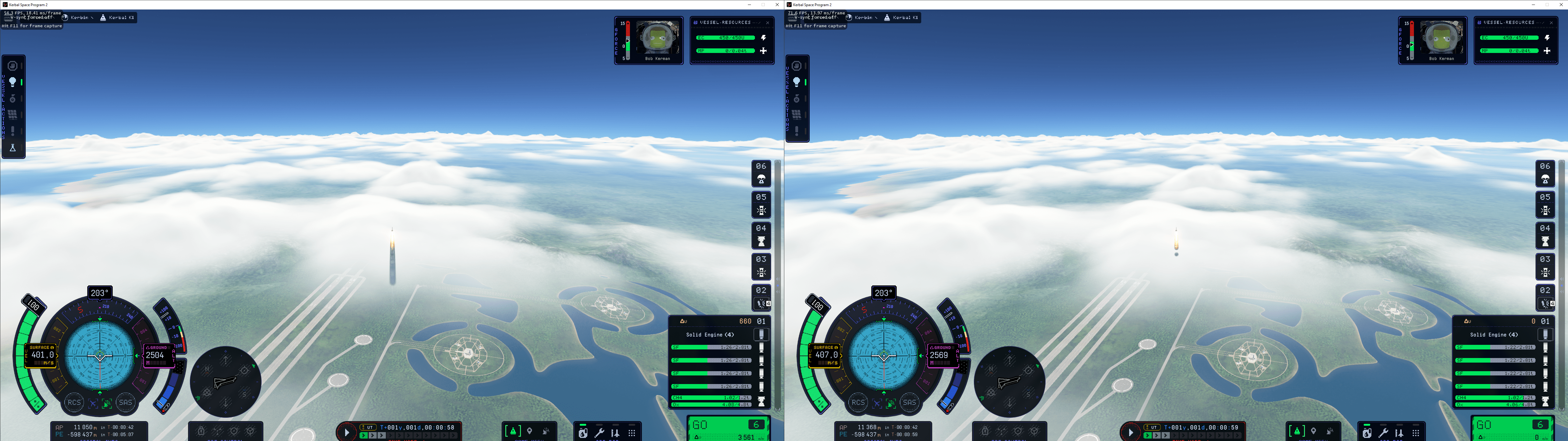

In-flight around the cloud layer, we went from 54 to 71 fps